For the last decade, we’ve trained artificial intelligence to imitate us: our language, our patterns, our preferences. This approach has powered remarkable systems like large language models (LLMs), giving us tools that can write essays, summarize legal texts, and even debug code. But as impressive as these systems are, they are approaching the limit of what imitation alone can deliver.

That’s the message from David Silver and Richard Sutton, two legends in the field, in their recent paper, “Welcome to the Era of Experience.” They argue convincingly that if AI is to move beyond mimicry and into true innovation, it needs more than human data. It needs its own experience.

This shift isn’t just about new capabilities. It demands a rethink of how we design, monitor, and govern AI.

The Core Idea: Learning From Experience, Not Just Data

Today’s most advanced models rely on static datasets such as Wikipedia, Stack Overflow, and Reddit. They’re trained to predict the next word, not to explore or reason in the world. That’s a problem. As the paper points out, most high-quality human data sources have either already been consumed or soon will be. We’re near the ceiling of what this approach can offer.

In contrast, the next generation of AI will be experiential agents. These aren’t prompt-bound tools. They’re systems that act in the world, observe outcomes, learn from feedback, and adapt over time. They’ll be shaped by continuous streams of experience, not isolated queries.

Silver and Sutton envision agents that:

- Retain memory across interactions

- Pursue long-term goals

- Learn from real-world signals (not just human ratings)

- Act independently in digital and physical environments

The model isn’t just “chatting.” It’s living, in a way—accumulating experience and knowledge through continuous interaction.
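To make the act, observe, learn, adapt cycle concrete, here is a minimal sketch in Python. It is not taken from the paper. It assumes a toy two-action world and a simple epsilon-greedy learner, and the names `ExperientialAgent`, `environment`, and `run` are hypothetical. The point is only that the agent's estimates persist between interactions and improve from the rewards it observes, not from a labelled dataset.

```python
import random


class ExperientialAgent:
    """Toy agent that keeps running value estimates across interactions."""

    def __init__(self, actions, epsilon=0.1, seed=0):
        self.actions = list(actions)
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        # Memory that persists from one interaction to the next.
        self.values = {a: 0.0 for a in self.actions}
        self.counts = {a: 0 for a in self.actions}

    def act(self):
        # Explore occasionally; otherwise exploit the best-known action.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.actions)
        return max(self.actions, key=lambda a: self.values[a])

    def learn(self, action, reward):
        # Incremental average: estimate += (reward - estimate) / n
        self.counts[action] += 1
        self.values[action] += (reward - self.values[action]) / self.counts[action]


def environment(action, rng):
    """Hypothetical world: action 'b' pays off more often than action 'a'."""
    success_rate = {"a": 0.2, "b": 0.8}[action]
    return 1.0 if rng.random() < success_rate else 0.0


def run(steps=2000, seed=0):
    agent = ExperientialAgent(["a", "b"], epsilon=0.1, seed=seed)
    env_rng = random.Random(seed + 1)
    for _ in range(steps):
        action = agent.act()                    # act in the world
        reward = environment(action, env_rng)   # observe the outcome
        agent.learn(action, reward)             # learn from feedback
    return agent


if __name__ == "__main__":
    trained = run()
    print(trained.values, trained.counts)
```

After enough steps the agent should favour the action that pays off more often, even though nobody told it which one that is. Real experiential agents would be far richer, but the loop has the same shape.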

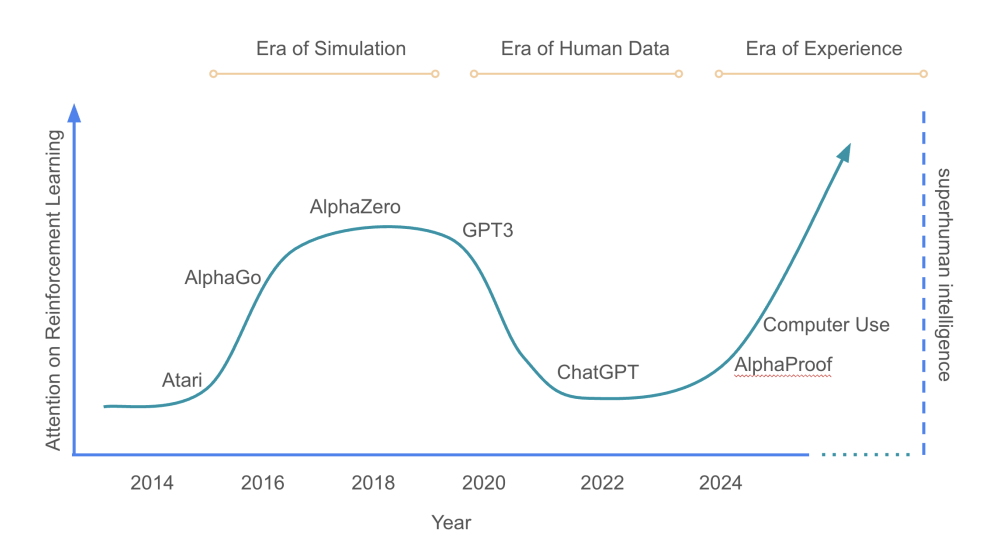

The Return of Reinforcement Learning

This approach revives reinforcement learning (RL) as the central pillar of AI progress. RL was key to systems like AlphaGo and AlphaZero. AlphaGo started from human expert games and then improved through self-play, while AlphaZero used no human game data at all: it discovered winning strategies by playing millions of games against itself.

Then RL was sidelined as LLMs took over. The new models were broader, trained on everything at once. But something was lost in the process: the ability to discover new knowledge from the ground up.

Now, with experience-based agents, RL is back. And this time its scope is bigger.

Agents won’t just explore simulated games. They’ll navigate open-ended environments, guided by reward signals from the world—anything from carbon emissions to heart rate, from exam scores to stock prices.

Why This Changes Everything for Compliance

From a compliance and governance standpoint, this is the real inflection point—not generative AI, but adaptive AI.

Because if a model can:

- Change its behavior after deployment

- Set or influence its own goals

- Act autonomously across systems and interfaces

…then traditional safeguards won’t cut it.

We need to answer hard questions:

- How do we audit models that learn and evolve continuously?

- What defines misalignment in a system with changing objectives?

- How do we contain risks when feedback loops span months or years?

Reward functions, once hard-coded, may now be dynamic and user-specific. That flexibility is powerful—but it also makes outcomes harder to predict and explain.
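One way to picture a dynamic, user-specific reward is as a weighted sum of observed signals whose weights can change over time. The sketch below is an illustration under that assumption, not the paper's formulation. `RewardSpec`, the signal names, and the weights are all hypothetical. It also keeps every earlier set of weights, since explaining past behaviour requires knowing which reward was in force at the time.

```python
from dataclasses import dataclass, field


@dataclass
class RewardSpec:
    """Hypothetical per-user reward: a weighted sum of observed signals."""

    weights: dict
    history: list = field(default_factory=list)  # earlier weight versions, kept for audit

    def reward(self, signals):
        # Signals missing from the observation contribute nothing.
        return sum(w * signals.get(name, 0.0) for name, w in self.weights.items())

    def update_weights(self, new_weights, reason):
        # Store the outgoing version and why it changed before replacing it.
        self.history.append((dict(self.weights), reason))
        self.weights = dict(new_weights)


# Usage: the same observation is scored differently once the user's preferences change.
spec = RewardSpec({"exam_score": 1.0, "study_hours": -0.1})
observation = {"exam_score": 0.8, "study_hours": 2.0}
before = spec.reward(observation)   # about 0.6

spec.update_weights(
    {"exam_score": 1.0, "study_hours": -0.3},
    reason="user asked for a lighter workload",
)
after = spec.reward(observation)    # about 0.2
```

The behaviour the agent is optimizing for shifted without any change to the model itself, which is exactly why the weight history matters to an auditor.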

There Are Safety Gains, Too

To their credit, Silver and Sutton don’t ignore the risks. They acknowledge that longer-term autonomy can reduce human oversight and create new failure modes. But they also argue that experience brings unique safety advantages.

For example:

- Experiential agents can adapt to environmental change (like a pandemic or hardware failure).

- They can adjust their behavior if users show dissatisfaction or discomfort.

- They can evolve goals incrementally—avoiding brittle, catastrophic misalignment.

In some ways, this is closer to how humans manage risk: through observation, iteration, and course correction. But it still requires a framework of accountability.

The Road Ahead: Compliance Must Move With the Technology

The era of experience is coming. It’s already arriving in pockets—agents that browse the web, execute code, or simulate long-term planning. These systems won’t stay constrained for long.

As AI leaders and compliance professionals, we have a responsibility to stay ahead of this shift. That means:

- Building standards for continuous oversight

- Designing reward systems that balance autonomy with human values

- Developing transparency tools for evolving models

- Creating protocols for human override—even in persistent learning loops

Compliance can’t be static when AI is dynamic.
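As a rough illustration of continuous oversight and human override, the sketch below wraps an arbitrary policy with three controls: an audit log, a guardrail check on every action, and a halt switch that an operator can flip at any time. It rests on assumptions throughout. `OverseenAgent`, `is_allowed`, and the event names are hypothetical, and a production system would need tamper-resistant logging and far stronger guarantees.

```python
import json
import time


class OverseenAgent:
    """Wraps a policy with an audit log, a guardrail check, and a stop switch."""

    def __init__(self, policy, is_allowed):
        self.policy = policy            # callable: observation -> action
        self.is_allowed = is_allowed    # callable: action -> bool (the guardrail)
        self.halted = False
        self.audit_log = []

    def halt(self, reason):
        # Human override: once halted, no further actions are taken.
        self.halted = True
        self._record("halt", reason=reason)

    def step(self, observation):
        if self.halted:
            self._record("refused", observation=observation)
            return None
        action = self.policy(observation)
        if not self.is_allowed(action):
            # The proposed action is logged but never executed.
            self._record("blocked", observation=observation, action=action)
            return None
        self._record("action", observation=observation, action=action)
        return action

    def _record(self, event, **details):
        self.audit_log.append({"time": time.time(), "event": event, **details})

    def export_log(self):
        # Serialize the full trail for reviewers (assumes JSON-friendly values).
        return json.dumps(self.audit_log)


# Usage with a stand-in policy and guardrail.
agent = OverseenAgent(policy=lambda obs: obs * 2, is_allowed=lambda a: a < 10)
agent.step(2)                    # allowed and logged
agent.step(7)                    # proposed action 14 is blocked
agent.halt("operator request")
agent.step(1)                    # refused: the agent is halted
```

The design choice worth noting is that the override sits outside the learning loop, so it keeps working no matter how the policy changes underneath it.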

Final Thought

The next chapter in AI isn’t about more data. It’s about more learning. Not from us—but from the world.

As AI agents grow more autonomous, the real challenge isn’t intelligence. It’s trust, alignment, and governance.

We’re not just building smarter systems. We’re building systems that decide how to get smarter on their own. That’s a capability—and a responsibility—we can’t take lightly.
